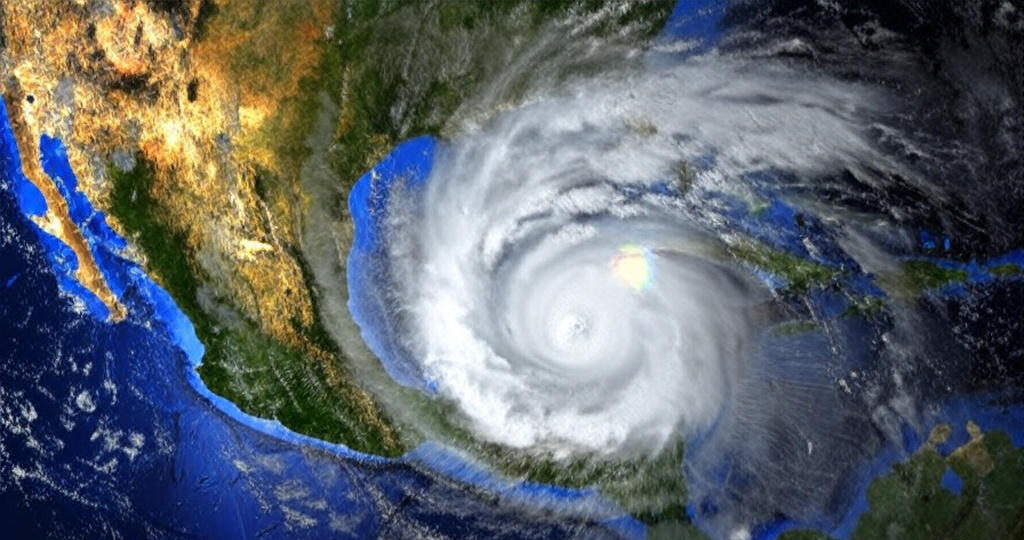

In the face of escalating climate challenges, deep learning is reshaping climate modeling and sharpening the predictions that guide global policy and adaptation strategies. As of September 2025, advances in artificial intelligence (AI) let models process vast datasets and, in a growing number of tasks, match or outperform traditional methods in forecasting temperature shifts, precipitation patterns, and extreme weather events. These gains stem largely from hybrid AI-physics approaches and improved computational efficiency, which give policymakers and scientists better tools to mitigate risk. With climate impacts intensifying, these innovations underscore the growing role of AI in planning for a sustainable future.

Key Advancements in Deep Learning for Climate Prediction

Deep learning has emerged as a cornerstone in refining climate models, addressing longstanding limitations in resolution and computational demands. Traditional general circulation models (GCMs) often struggle with coarse spatial scales, but AI integrations now enable fine-grained simulations essential for regional planning.

NeuralGCM: Speed and Precision Redefined

One of the most notable breakthroughs is Google’s NeuralGCM, a hybrid model blending machine learning with physics-based simulations. Described in a Nature paper in July 2024, NeuralGCM is reported by Google to cut the computational cost of atmospheric simulation by roughly 100,000 times relative to conventional high-resolution systems like X-SHiELD, with its 1.4° configuration running more than 3,500 times faster, while maintaining comparable accuracy in long-term climate projections. Google describes this saving as equivalent to 25 years of high-performance computing progress, and it allows researchers to run scenarios on standard laptops rather than supercomputers.

- Computational Gains: Reduces simulation time from weeks to minutes, democratizing access for global research teams.

- Accuracy Benchmarks: Matches state-of-the-art models in temperature and wind predictions, with open-source code on GitHub fostering collaborative enhancements.

- Applications: Ideal for exploring aerosol impacts and extreme precipitation, areas previously hindered by resource constraints.
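The hybrid idea behind this kind of model, a physics-based solver whose tendencies are adjusted by a learned component, can be sketched on a toy problem. The following is a minimal illustration and not NeuralGCM's code or API. The names `physics_tendency`, `learned_correction`, and `hybrid_step` are hypothetical, the "physics" is 1-D upwind advection on a periodic grid, and the "learned" term is a fixed diffusion-like placeholder standing in for a trained neural network.

```python
import numpy as np

def physics_tendency(u, c=1.0, dx=1.0):
    """First-order upwind tendency -c * du/dx on a periodic grid (c > 0)."""
    return -c * (u - np.roll(u, 1)) / dx

def learned_correction(u, weight=0.0):
    """Placeholder for a trained network (assumption): a scaled second difference."""
    return weight * (np.roll(u, 1) - 2.0 * u + np.roll(u, -1))

def hybrid_step(u, dt=0.1, weight=0.0):
    """Advance one explicit step using physics tendency plus learned correction."""
    return u + dt * (physics_tendency(u) + learned_correction(u, weight))

# Toy initial state: one sine wave on a 64-point periodic grid (grid-index units).
n = 64
u0 = np.sin(2.0 * np.pi * np.arange(n) / n)

u = u0.copy()
for _ in range(100):
    u = hybrid_step(u, dt=0.05, weight=0.02)
```

In a real hybrid model the correction would be trained against reference data and would act on full 3-D atmospheric state. The structure of the update, solver output plus a learned adjustment, is the part this sketch is meant to convey.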

Super-Resolution Techniques for Temperature Downscaling

Deep learning super-resolution models, such as Enhanced Deep Super-Resolution (EDSR) and Very Deep Super-Resolution (VDSR), have revolutionized temperature data downscaling. A comprehensive 2025 study downscaled ERA5 data from 250 km to 25 km resolution, capturing nuances in complex terrains like the Himalayas. Residual networks in these models improved structural similarity index (SSIM) and peak signal-to-noise ratio (PSNR), outperforming shallower convolutional neural networks (CNNs) by up to 15% in prediction accuracy.

These techniques are particularly vital for urban planners assessing heatwave vulnerabilities, where fine-scale data informs resilient infrastructure design.
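To make the evaluation metric concrete, here is a minimal sketch of PSNR applied to a tenfold downscaling setup. It rests on loud assumptions: the temperature field is synthetic, `upsample_nearest` is a naive baseline, and the "network" is replaced by a stand-in that recovers exactly half of the missing fine-scale residual. It does not reproduce EDSR, VDSR, or the cited study.

```python
import numpy as np

def psnr(reference, estimate, data_range):
    """Peak signal-to-noise ratio in dB: 10 * log10(data_range^2 / MSE)."""
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0.0:
        return float("inf")
    return 10.0 * np.log10(data_range ** 2 / mse)

def upsample_nearest(coarse, factor):
    """Repeat each coarse cell factor x factor times (no learning involved)."""
    return np.kron(coarse, np.ones((factor, factor)))

# Synthetic "fine" temperature field in kelvin on a 40 x 40 grid (assumption).
y, x = np.mgrid[0:40, 0:40]
fine = 280.0 + 5.0 * np.sin(x / 6.0) + 3.0 * np.cos(y / 9.0)

# Coarsen by 10x block averaging, mirroring a 250 km -> 25 km ratio.
factor = 10
coarse = fine.reshape(4, factor, 4, factor).mean(axis=(1, 3))

baseline = upsample_nearest(coarse, factor)

# Stand-in for a residual network: pretend it recovers half of the residual.
residual_prediction = 0.5 * (fine - baseline)
refined = baseline + residual_prediction

data_range = fine.max() - fine.min()
psnr_baseline = psnr(fine, baseline, data_range)
psnr_refined = psnr(fine, refined, data_range)
print(psnr_baseline, psnr_refined)
```

Halving the error everywhere cuts the mean squared error to a quarter, so the refined field gains 10·log10(4), about 6 dB, over the baseline. Residual architectures follow the same pattern: they predict a correction to an upsampled input rather than the full field.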

Balancing Simplicity and Complexity in Model Performance

While deep learning promises transformative insights, recent analyses reveal that simpler models can sometimes eclipse complex AI in specific tasks, highlighting the need for tailored benchmarking.

When Linear Models Outperform AI

An MIT-led study published in August 2025 in the Journal of Advances in Modeling Earth Systems compared linear pattern scaling (LPS) against deep learning emulators using the ClimateBench dataset. LPS, which estimates each local variable as a linear function of global mean temperature, surpassed AI in predicting 75% of atmospheric variables, including regional temperatures and precipitation, because the deep learning emulators tended to overfit internal climate variability. The lesson is that simple, well-understood methods provide robust baselines, especially for ensemble-mean forecasts.
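Linear pattern scaling is simple enough to sketch in a few lines: each grid cell gets its own straight-line fit against global mean temperature. The code below is a minimal illustration with hypothetical function names (`fit_pattern_scaling`, `predict_pattern_scaling`) and synthetic data. It is not the study's code and does not use ClimateBench.

```python
import numpy as np

def fit_pattern_scaling(global_mean_t, local_fields):
    """Fit local = intercept + slope * global_mean_t independently per grid cell.

    global_mean_t: shape (n_samples,)
    local_fields:  shape (n_samples, n_lat, n_lon)
    """
    n = global_mean_t.shape[0]
    design = np.column_stack([np.ones(n), global_mean_t])  # (n, 2)
    targets = local_fields.reshape(n, -1)                   # (n, n_cells)
    coeffs, *_ = np.linalg.lstsq(design, targets, rcond=None)
    grid_shape = local_fields.shape[1:]
    return coeffs[0].reshape(grid_shape), coeffs[1].reshape(grid_shape)

def predict_pattern_scaling(intercept, slope, global_mean_t):
    """Predict local fields for new global-mean temperature values."""
    return intercept + slope * np.asarray(global_mean_t)[:, None, None]

# Synthetic training data (assumption): 50 samples on an 8 x 16 grid with noise.
rng = np.random.default_rng(0)
gmt = np.linspace(0.0, 3.0, 50)
true_slope = rng.uniform(0.5, 2.0, size=(8, 16))
fields = 1.0 + true_slope * gmt[:, None, None] + rng.normal(0.0, 0.1, (50, 8, 16))

intercept, slope = fit_pattern_scaling(gmt, fields)
projection = predict_pattern_scaling(intercept, slope, [4.0])
```

Because the fit has only two parameters per grid cell, there is little room to fit noise, which is one plausible reading of why such a baseline holds up well against larger emulators.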

Hybrid Approaches: The Path Forward

Hybrid models mitigate these pitfalls by fusing AI’s pattern recognition with physical consistency. Combining neural architectures helps as well: CNNs paired with long short-term memory (LSTM) units achieved lower root mean square error (RMSE) than standalone dynamical models in monthly climate forecasts for Jinan, China. Future iterations, incorporating transfer learning and bidirectional LSTMs, could further improve accuracy for multivariate predictions.

- Bias Correction: AI post-processes GCM outputs, enhancing reliability for extremes like droughts.

- Interpretability Tools: Techniques like SHAP and Grad-CAM demystify “black box” decisions, building trust among stakeholders.

- Equity Integration: Models now embed socioeconomic data, prioritizing vulnerable regions in resource allocation.
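The bias-correction step in the list above can be illustrated with empirical quantile mapping, a classical statistical technique used here as a simple stand-in for a learned post-processor. The function names and the synthetic gamma-distributed "precipitation" samples are assumptions for illustration only.

```python
import numpy as np

def fit_quantile_mapping(model_hist, obs_hist, n_quantiles=101):
    """Return matching quantiles of the model and observed historical samples."""
    probs = np.linspace(0.0, 1.0, n_quantiles)
    return np.quantile(model_hist, probs), np.quantile(obs_hist, probs)

def apply_quantile_mapping(values, model_q, obs_q):
    """Map model values onto the observed distribution by linear interpolation.

    Values outside the historical model range are clamped to the end quantiles.
    """
    return np.interp(values, model_q, obs_q)

# Synthetic example (assumption): the "model" is too dry and too narrow.
rng = np.random.default_rng(0)
obs = rng.gamma(2.0, 3.0, size=2000)
model = 0.6 * rng.gamma(2.0, 3.0, size=2000) + 1.0

model_q, obs_q = fit_quantile_mapping(model, obs)
corrected = apply_quantile_mapping(model, model_q, obs_q)

def rmse(a, b):
    """Root mean square error between two equally shaped arrays."""
    return float(np.sqrt(np.mean((a - b) ** 2)))

# Compare sorted samples so the score reflects distributions, not pairing.
print(rmse(np.sort(model), np.sort(obs)), rmse(np.sort(corrected), np.sort(obs)))
```

The clamping at the end quantiles is a design choice worth noting: values more extreme than anything in the historical record are not extrapolated, which matters for exactly the droughts and other extremes the correction is meant to serve.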

Challenges and Future Directions in AI-Driven Climate Modeling

Despite these strides, hurdles persist in data sparsity, model interpretability, and extrapolation to unprecedented scenarios.

Addressing Data and Ethical Gaps

Arctic regions exemplify data challenges, where sparse observations limit AI training. Discussions on X cite figures as high as 98% accuracy for deep learning sea ice predictions, while calling for new ways to cope with sparsity, such as retrieval augmentation from historical analogs. Ethically, ensuring equitable AI deployment remains paramount, as biases in training data could exacerbate global disparities.
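The retrieval half of an analog-based approach can be sketched as a nearest-neighbor search over an archive of past states. This is a minimal illustration with hypothetical names (`retrieve_analogs`, `analog_forecast`) and a tiny made-up archive. In a retrieval-augmented model the retrieved analogs would typically be passed to a network as extra inputs rather than averaged directly.

```python
import numpy as np

def retrieve_analogs(query, archive, k=3):
    """Return indices and distances of the k archive states closest to the query.

    query:   shape (n_features,)
    archive: shape (n_states, n_features)
    """
    distances = np.linalg.norm(archive - query, axis=1)
    order = np.argsort(distances)[:k]
    return order, distances[order]

def analog_forecast(query, archive, next_states, k=3):
    """Average what followed the k closest historical analogs."""
    idx, _ = retrieve_analogs(query, archive, k)
    return next_states[idx].mean(axis=0)

# Tiny illustrative archive (assumption): four past states with two features each.
archive = np.array([[0.0, 0.0], [1.0, 1.0], [5.0, 5.0], [1.2, 0.9]])
next_states = np.array([[0.0], [10.0], [50.0], [20.0]])
query = np.array([1.0, 1.0])

idx, dist = retrieve_analogs(query, archive, k=2)
forecast = analog_forecast(query, archive, next_states, k=2)
```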

Emerging Horizons for 2026 and Beyond

Looking ahead, foundation models like TianXing promise linear-complexity attention mechanisms, slashing GPU demands with minimal accuracy trade-offs. Workshops at NeurIPS 2025 signal a push toward interpretable hybrids for extremes, potentially integrating causal discovery for deeper process understanding. As climate urgency mounts, these evolutions will empower proactive, just transitions.
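For readers unfamiliar with linear-complexity attention, the sketch below shows one generic, kernel-based formulation and checks it against the equivalent quadratic computation. It is an assumption-laden illustration of the general idea, not TianXing's mechanism, whose details are not given here. The feature map `elu(x) + 1` and all function names are choices made for this example.

```python
import numpy as np

def feature_map(x):
    """Positive feature map elu(x) + 1: x + 1 for x > 0, exp(x) otherwise."""
    return np.where(x > 0, x + 1.0, np.exp(np.minimum(x, 0.0)))

def linear_attention(q, k, v):
    """Cost linear in sequence length n: summarize keys and values once."""
    q_f, k_f = feature_map(q), feature_map(k)
    kv = k_f.T @ v            # (d, d_v) summary shared by every query
    k_sum = k_f.sum(axis=0)   # (d,) normalizer terms
    return (q_f @ kv) / (q_f @ k_sum)[:, None]

def quadratic_attention(q, k, v):
    """Same kernel, but materializing the full n x n weight matrix."""
    weights = feature_map(q) @ feature_map(k).T
    weights = weights / weights.sum(axis=1, keepdims=True)
    return weights @ v

rng = np.random.default_rng(0)
n, d, d_v = 128, 16, 8
q = rng.normal(size=(n, d))
k = rng.normal(size=(n, d))
v = rng.normal(size=(n, d_v))

out_linear = linear_attention(q, k, v)
out_quadratic = quadratic_attention(q, k, v)
```

Both functions compute the same result. The linear version never forms the n x n matrix, so memory and compute grow with sequence length rather than its square, which is where the GPU savings come from. The trade-off is that the kernel only approximates what softmax attention would produce.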

In summary, 2025 marks a pivotal year for deep learning in climate modeling, where accuracy gains propel actionable science. By harnessing these tools judiciously, humanity can navigate environmental uncertainties toward resilience and equity.